Comprehensive Reinforcement Learning Notes

Sharing my thesis-era RL notes covering everything from DP and TD to PPO, TD3, and SAC—plus open-source implementations to explore.

While working on my thesis, I kept detailed notes on the reinforcement learning stack, from foundational concepts to advanced, production-ready algorithms. I’m releasing them so anyone diving into RL can shorten the learning curve.

📘 What's Inside the Notes

The two PDFs go wide and deep:

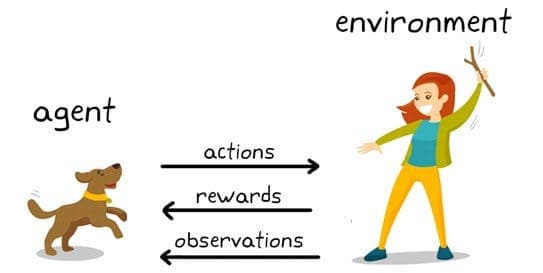

- Part 1: RL terminology, dynamic programming, Monte Carlo, temporal-difference learning, Deep SARSA, and other core building blocks.

Download Part 1

- Part 2: Modern policy and value-based methods including PPO, DDPG, A2C, TD3, SAC, and REINFORCE, with practical nuances I captured during experiments.

Download Part 2

Each chapter blends definitions, derivations, and heuristics that helped me debug and optimize real-world agents.

🧪 Code to Back It Up

To complement the theory, I also published tutorial-grade implementations of the algorithms covered in the notes. They live in this GitHub repo:

Reinforcement_Learning_Techniques Repository

The repo includes modular code for core RL loops, training configurations, and experiment logs—helpful if you want to reproduce benchmarks or build new agents on top.
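To give a feel for what a "core RL loop" looks like at the tabular end of the notes, here is a minimal sketch of TD(0) value prediction. It is not taken from the repo: the 7-state random-walk environment, the function name `td0_random_walk`, and the hyperparameters are all assumptions chosen for illustration.

```python
import random


def td0_random_walk(episodes=5000, alpha=0.05, gamma=1.0, seed=0):
    """Estimate state values with tabular TD(0) on a toy random walk.

    Hypothetical environment (not from the notes or the repo):
    states 0..6 in a line, 0 and 6 are terminal, every episode starts
    in state 3, the agent moves left or right with equal probability,
    and the only reward is +1 for stepping into state 6.
    """
    rng = random.Random(seed)
    values = [0.0] * 7  # terminal states keep a value of 0

    for _ in range(episodes):
        state = 3
        while state not in (0, 6):
            next_state = state + rng.choice((-1, 1))
            reward = 1.0 if next_state == 6 else 0.0

            # TD(0) update: move V(s) toward the one-step bootstrapped target.
            td_target = reward + gamma * values[next_state]
            values[state] += alpha * (td_target - values[state])

            state = next_state

    return values


if __name__ == "__main__":
    print([round(v, 2) for v in td0_random_walk()])
```

For this toy problem the true values of the non-terminal states are 1/6 through 5/6, so the printed estimates should land near those numbers, with some noise from the constant step size. The deep methods in Part 2 keep the same interact-then-update shape and replace the table with a neural network.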

Why Share This?

- Researchers can use the notes as a quick refresher before diving into papers.

- Engineers get a practical map for applying state-of-the-art RL in production.

- Students can follow the progression from tabular methods to deep actor-critic frameworks without missing key context.

If you end up using the notes or the repo, I’d love to hear what you build. RL is evolving fast—let’s keep sharing what works.