Inside JPMorgan's Investment Research Agent Architecture

A breakdown of JPMorgan's 'Ask David' multi-agent system and the lessons it offers for enterprise GenAI teams.

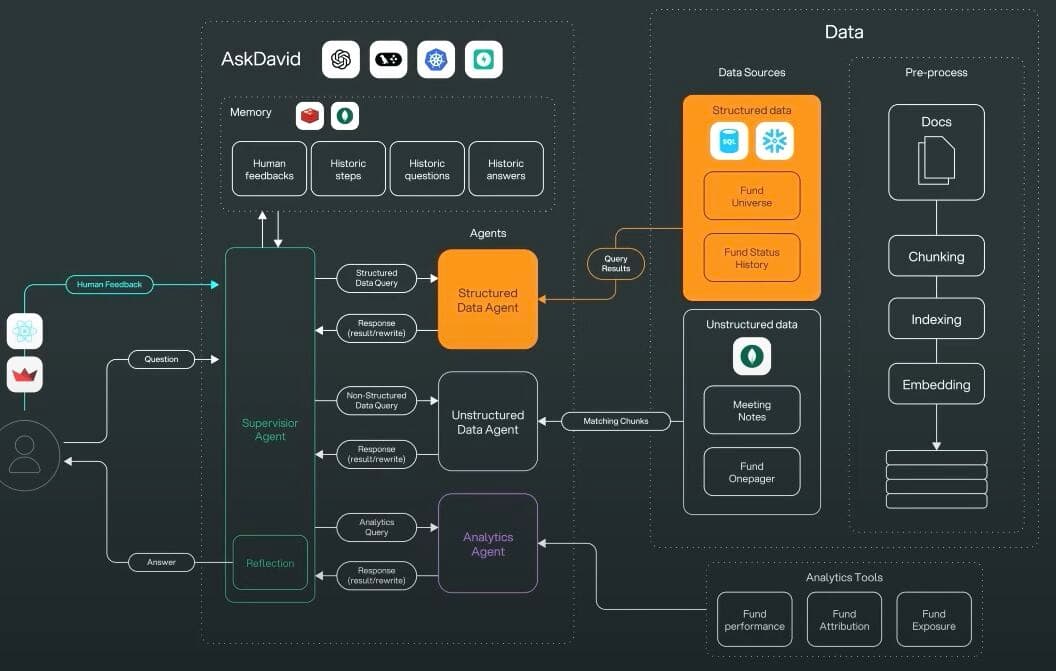

JPMorgan Chase recently shared a rare look at the investment research agent that powers "Ask David." The architecture diagram they presented at LangChain's Interrupt conference has become one of my go-to blueprints for designing production-grade agentic systems.

From the way it orchestrates specialized agents to the emphasis on evaluation and human oversight, there is plenty to learn for anyone building serious GenAI tooling.

🧠 The Multi-Agent Architecture

At the center sits a supervisor agent that understands the user's intent, manages conversation state, and decides how to route work across dedicated sub-agents. Around it live tightly scoped specialists:

- Structured Data Agent — Translates natural language into SQL queries or API calls, then summarizes the results through an LLM layer.

- Unstructured Data Agent (RAG) — Retrieves insights from documents, emails, meeting notes, and even audio/video transcripts using Retrieval-Augmented Generation.

- Analytics Agent — Taps internal models and APIs, switching between a lightweight ReAct flow and text-to-code generation for deeper analysis.

This separation keeps each agent opinionated and manageable, while the supervisor coordinates the full reasoning chain.
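To make the supervisor pattern concrete, here is a minimal Python sketch. It rests on stated assumptions and is not JPMorgan's implementation. Every name in it is hypothetical: the three stub agents, `classify_intent`, and `ConversationState`. A keyword heuristic stands in for the LLM-based intent classification a real supervisor would perform. Each stub returns a tagged string where a real agent would call SQL, retrieval, or analytics services.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical sub-agents: in a real system each would wrap LLM and tool calls.
def structured_data_agent(query: str) -> str:
    # Stand-in for text-to-SQL / API call followed by an LLM summary.
    return f"[structured] SQL result summary for: {query}"

def unstructured_data_agent(query: str) -> str:
    # Stand-in for RAG over documents, emails, notes, and transcripts.
    return f"[rag] retrieved passages for: {query}"

def analytics_agent(query: str) -> str:
    # Stand-in for a ReAct or text-to-code flow over internal models.
    return f"[analytics] computed analysis for: {query}"

AGENTS: Dict[str, Callable[[str], str]] = {
    "analytics": analytics_agent,
    "structured": structured_data_agent,
    "unstructured": unstructured_data_agent,
}

# Keyword heuristic standing in for an LLM intent classifier (assumption).
ROUTING_KEYWORDS = {
    "analytics": ("forecast", "simulate", "regression", "risk model"),
    "structured": ("holdings", "price", "returns", "assets under management"),
    "unstructured": ("meeting", "email", "notes", "transcript", "research"),
}

@dataclass
class ConversationState:
    """Minimal conversation memory kept by the supervisor."""
    history: List[str] = field(default_factory=list)

def classify_intent(query: str) -> str:
    """Return the first intent whose keywords appear in the query."""
    q = query.lower()
    for intent, keywords in ROUTING_KEYWORDS.items():
        if any(k in q for k in keywords):
            return intent
    return "unstructured"  # default: fall back to document search

def supervisor(query: str, state: ConversationState) -> str:
    """Route the query to one specialist and record the turn in state."""
    intent = classify_intent(query)
    answer = AGENTS[intent](query)
    state.history.append(f"{intent}: {query}")
    return answer

if __name__ == "__main__":
    state = ConversationState()
    print(supervisor("What were the fund's returns last quarter?", state))
    # prints "[structured] SQL result summary for: What were the fund's returns last quarter?"
```

If `classify_intent` were replaced with an LLM call, the rest of the loop would stay unchanged. That is the practical benefit of keeping the specialists behind a single routing interface.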

🪢 Key Workflow Nodes

Two decision points keep the experience tailored and trustworthy:

- Personalization Node — Shapes the final answer based on user role (advisor vs. specialist), ensuring the response fits the audience.

- Reflection Node — An LLM judge checks the answer for completeness and accuracy. If the judge is not confident in the answer, the system loops back and reworks it before the user ever sees it.

Together they form a feedback loop that balances speed with rigor.
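The reflection loop can be sketched the same way. This is an illustrative assumption, not the Ask David code. In the sketch, `judge` is a toy keyword-coverage scorer standing in for an LLM judge, and `toy_generate` is a hypothetical generator. The threshold and retry limit are arbitrary. The sketch also runs personalization only after validation, which is one plausible ordering of the two nodes. Escalating to a human when retries run out is likewise an assumed design choice.

```python
from typing import Callable, Tuple

# Hypothetical stopword list so the toy judge ignores question filler words.
STOPWORDS = {"what", "were", "which", "about", "with", "from", "that", "this", "have"}

def judge(question: str, draft: str) -> float:
    """Toy stand-in for an LLM judge: the share of the question's content
    words (longer than 3 characters, not stopwords) that the draft mentions."""
    terms = {w.strip("?.,!").lower() for w in question.split()}
    terms = {t for t in terms if len(t) > 3 and t not in STOPWORDS}
    if not terms:
        return 1.0
    covered = sum(1 for t in terms if t in draft.lower())
    return covered / len(terms)

def personalize(answer: str, role: str) -> str:
    """Toy personalization node: frames the same answer for each audience."""
    if role == "advisor":
        return f"Summary for advisor: {answer}"
    return f"Detailed view for specialist: {answer}"

def answer_with_reflection(
    question: str,
    generate: Callable[[str, str], str],
    role: str,
    threshold: float = 0.8,
    max_attempts: int = 3,
) -> Tuple[str, int]:
    """Generate, judge, and retry with feedback; escalate if never confident."""
    feedback = ""
    draft = ""
    for attempt in range(1, max_attempts + 1):
        draft = generate(question, feedback)
        score = judge(question, draft)
        if score >= threshold:
            # Only validated drafts reach the personalization step.
            return personalize(draft, role), attempt
        feedback = f"Draft scored {score:.2f}; address every part of the question."
    # Out of retries: hand off to a human instead of shipping a weak answer.
    return f"ESCALATE TO HUMAN REVIEW: {draft}", max_attempts

def toy_generate(question: str, feedback: str) -> str:
    """Hypothetical generator (toy figures) that improves once given feedback."""
    if not feedback:
        return "Returns were up 4%."
    return "Returns were up 4% and volatility was flat."

if __name__ == "__main__":
    print(answer_with_reflection("What were returns and volatility?", toy_generate, "advisor"))
    # prints ('Summary for advisor: Returns were up 4% and volatility was flat.', 2)
```

The first draft covers only "returns," so it scores 0.5 and triggers a retry with feedback. The second draft covers both requested points and passes.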

💡 Three Lessons from Production

The JPMorgan team distilled their rollout into three principles worth adopting:

- Iterate fast, refactor often — Start with a simple ReAct agent, then layer in supervisors and specialists as you earn confidence. Shipping incremental improvements beats chasing the perfect architecture on day one.

- Evaluation-driven development — Treat GenAI evaluation as an ongoing discipline rather than a one-off check. Define metrics beyond accuracy, test each sub-agent independently, and lean on LLM judges to scale qualitative review.

- Keep humans in the loop — The last mile still needs subject-matter experts (SMEs). When billions of dollars are on the line, escalation paths and SME review are essential.
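A per-agent evaluation harness is one way to put the second and third lessons into practice. The sketch below is hypothetical. `EvalCase`, `evaluate_agent`, and `overlap_judge` are illustrative names, and word overlap stands in for an LLM judge. The 0.7 review threshold is arbitrary. The harness tracks more than one metric (containment accuracy, judge score, latency) and routes low-scoring cases to SME review.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List

@dataclass
class EvalCase:
    query: str
    expected: str  # reference answer, ideally written or reviewed by an SME

@dataclass
class EvalResult:
    accuracy: float              # share of outputs containing the reference answer
    mean_judge_score: float      # average score from the (stand-in) judge
    mean_latency_s: float        # average wall-clock time per agent call
    needs_sme_review: List[str]  # queries routed to a human reviewer

def overlap_judge(query: str, output: str, expected: str) -> float:
    """Toy stand-in for an LLM judge: Jaccard word overlap with the reference."""
    a, b = set(output.lower().split()), set(expected.lower().split())
    return len(a & b) / len(a | b) if a | b else 1.0

def evaluate_agent(
    agent: Callable[[str], str],
    cases: List[EvalCase],
    judge: Callable[[str, str, str], float] = overlap_judge,
    review_threshold: float = 0.7,
) -> EvalResult:
    """Run one agent in isolation against its own test set."""
    hits, scores, latencies, flagged = 0, [], [], []
    for case in cases:
        start = time.perf_counter()
        output = agent(case.query)
        latencies.append(time.perf_counter() - start)
        hits += int(case.expected.lower() in output.lower())
        score = judge(case.query, output, case.expected)
        scores.append(score)
        if score < review_threshold:
            flagged.append(case.query)  # escalate low-scoring cases to an SME
    return EvalResult(hits / len(cases), mean(scores), mean(latencies), flagged)

if __name__ == "__main__":
    # Toy agent and toy data, purely illustrative.
    answers = {"Q2 revenue?": "Q2 revenue was 10M", "Top holding?": "unknown"}
    cases = [
        EvalCase("Q2 revenue?", "Q2 revenue was 10M"),
        EvalCase("Top holding?", "Top holding is ACME"),
    ]
    result = evaluate_agent(lambda q: answers.get(q, ""), cases)
    print(result.accuracy, result.needs_sme_review)  # 0.5 ['Top holding?']
```

Because each specialist gets its own test set, a regression in, say, the RAG agent shows up in that agent's numbers instead of being averaged away in end-to-end scores.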

Final Thoughts

Ask David shows that enterprise agents succeed when they combine tight orchestration, defensive design, and measurable outcomes. If you are exploring multi-agent coordination, keep this reference handy, and plan for a future where humans and agents collaborate rather than compete.